The problem

GPUs are getting more and more memory and compute capability nowadays. This is super good news if you are using them for HPC (high-performance computing), but some workloads may only require a fraction of a card.

Let’s say you are training a Tensorflow model that cannot utilize the whole GPU at once, or your training has some parts that do not use the GPU, so the GPU sits idle until the CPU-bound step finishes. In cases like this, the GPU is not optimally utilized, so we may want to run multiple workloads on the same hardware.

This is where GPU orchestration comes into the picture.

Introduction

Many of the following examples rely on Docker Swarm, which is not the most sophisticated orchestrator nowadays, but it is easier to get started with. Nonetheless, the same problems apply to Kubernetes, since both use the same underlying technology, namely nvidia-docker.

This also means that everything from this point on applies only to NVIDIA GPUs, because we will use CUDA and Tensorflow, as many of us do in practice. Tensorflow and deep neural networks are not the subject of this article; they only serve as examples to illustrate the problems and their solutions.

Our solution: GPU orchestration using Docker

Without any knowledge of GPU orchestration, I started by delving into the documentation of Kubernetes and Docker Swarm to see if there was an “off-the-shelf” solution to the problem. Sadly, I didn’t find anything.

The first lead I got was nvidia-docker, a runtime that kindly mounts the host’s NVIDIA driver into our container. This means that as long as the driver is up to date, we are not bound to the CUDA version installed on the machine the container will run on. Each container can have its own CUDA version depending on our application (as long as the driver supports that version).

Once this is installed on a node, using the GPU from Docker containers becomes easy. The runtime can either be set as the default, or, which is more secure, specified for each container run via the --runtime argument.
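For the default-runtime option, the Docker daemon reads its runtime list from /etc/docker/daemon.json. The sketch below only generates that file's contents; the function name is hypothetical, and it assumes the runtime binary is installed at /usr/bin/nvidia-container-runtime (adjust the path for your installation).

```python
import json


def nvidia_daemon_config(make_default: bool) -> dict:
    """Build the contents of /etc/docker/daemon.json that register the NVIDIA runtime.

    Assumption: the runtime binary lives at /usr/bin/nvidia-container-runtime.
    """
    config = {
        "runtimes": {
            "nvidia": {
                "path": "/usr/bin/nvidia-container-runtime",
                "runtimeArgs": [],
            }
        }
    }
    if make_default:
        # Every container gets the NVIDIA runtime without passing --runtime=nvidia.
        config["default-runtime"] = "nvidia"
    return config


if __name__ == "__main__":
    # Opt-in variant: containers must request --runtime=nvidia explicitly.
    print(json.dumps(nvidia_daemon_config(make_default=False), indent=4))
```

After writing the file, Docker has to be restarted (for example with sudo systemctl restart docker). Leaving make_default off keeps GPU access opt-in per container, which matches the more secure option above.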

Here is the hello world example from nvidia-docker:

docker run --runtime=nvidia --rm nvidia/cuda:9.0-base nvidia-smi

This command should call nvidia-smi inside the container. If it runs successfully, the driver is reachable from inside the container.
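The same check can be wrapped into a small node health probe, for example before a job runner starts sending GPU work to a machine. A minimal sketch; the helper names are hypothetical, and the 9.0-base tag is taken from the example above and may need replacing with a tag that is still published.

```python
import subprocess
from typing import List

CUDA_IMAGE = "nvidia/cuda:9.0-base"  # image tag from the hello world example


def hello_world_cmd(image: str = CUDA_IMAGE) -> List[str]:
    """Argument list for the nvidia-docker hello world check."""
    return ["docker", "run", "--runtime=nvidia", "--rm", image, "nvidia-smi"]


def gpu_reachable(image: str = CUDA_IMAGE) -> bool:
    """Return True if nvidia-smi exits successfully inside a throwaway container."""
    try:
        result = subprocess.run(hello_world_cmd(image), capture_output=True, text=True)
    except FileNotFoundError:
        # The docker CLI itself is not installed on this machine.
        return False
    return result.returncode == 0


if __name__ == "__main__":
    print("GPU reachable:", gpu_reachable())
```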

Orchestration of resources

The biggest problem is that while CPU and memory can be constrained per container, the GPU cannot, at least not by default. However, there are workarounds for this problem.

One solution is to use generic resources, which are built into Docker. The other is to implement custom GPU orchestration logic. Both have their pros and cons: the first offloads the GPU orchestration difficulties from our shoulders, while the second gives us more flexibility.

The generic resource approach

If each application needs a full GPU, this approach isn’t much help, but if we want to share a single GPU among multiple applications at the same time, it really comes in handy. We can name our GPU resource `VRAM`, because memory is currently the only part of a GPU we can divide into portions.

On Debian-based machines, the easiest way to do this on a node is to edit Docker’s startup settings with sudo systemctl edit docker (check this readme for a more sophisticated way).

Add the following lines (if the file was empty before):

[Service]
ExecStart=
ExecStart=/usr/bin/dockerd -H unix:///var/run/docker.sock --node-generic-resource VRAM=8000

This sets the usable VRAM on the node to 8000 (MB). It does not mean the application cannot go over this bound; more on this later.
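When nodes carry different cards, the drop-in can be generated per node instead of being typed by hand. A sketch with a hypothetical function name; the dockerd path and socket are copied from the lines above, and the output is what would be pasted into the editor opened by sudo systemctl edit docker, followed by a Docker restart.

```python
def docker_dropin(vram_mb: int, dockerd: str = "/usr/bin/dockerd") -> str:
    """Render a systemd drop-in that advertises vram_mb of VRAM as a generic resource.

    The empty ExecStart= line clears the packaged start command before the new one is set.
    """
    if vram_mb <= 0:
        raise ValueError("vram_mb must be positive")
    return (
        "[Service]\n"
        "ExecStart=\n"
        f"ExecStart={dockerd} -H unix:///var/run/docker.sock "
        f"--node-generic-resource VRAM={vram_mb}\n"
    )


if __name__ == "__main__":
    print(docker_dropin(8000), end="")
```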

This allows us to create services within a swarm using our custom constraints (depending on how much VRAM is needed):

docker service create --generic-resource VRAM=4000 nvidia/cuda

This will nicely place services onto nodes that have enough of the resource available, one after the other. In this case, after starting this service, the node would still have 4000MB of VRAM to play with.
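The bookkeeping behind this can be illustrated with a toy model. This is a simplified first-fit sketch, not Swarm’s actual scheduler (which typically spreads tasks across all qualifying nodes); the function and node names are hypothetical. It shows why a request that fits nowhere stays pending.

```python
from typing import Dict, List, Optional


def place(nodes: Dict[str, int], requests: List[int]) -> List[Optional[str]]:
    """Assign each VRAM request (MB) to the first node with enough free VRAM.

    Returns the chosen node per request, or None if the request would stay pending.
    Simplified model: finished services never release their VRAM here.
    """
    free = dict(nodes)  # remaining advertised VRAM per node, in MB
    placements: List[Optional[str]] = []
    for need in requests:
        node = next((name for name, avail in free.items() if avail >= need), None)
        if node is not None:
            free[node] -= need
        placements.append(node)
    return placements


if __name__ == "__main__":
    # One node advertising VRAM=8000 and three services asking for 4000 each.
    print(place({"gpu-node-1": 8000}, [4000, 4000, 4000]))
```

With one 8000MB node, the first two 4000MB services are placed and the third one waits, mirroring the arithmetic above.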

Sadly, the Docker approach only constrains where a container is placed: every container on a node still sees the whole physical GPU, not a virtual GPU holding a fraction of the `real` one. To use only a fraction of the GPU within the application, Tensorflow provides a per-process memory fraction setting. It can be set in the session config (in this case, to use at most 50% of the VRAM):

import tensorflow as tf  # Tensorflow 1.x API; in 2.x these live under tf.compat.v1

# Let this process allocate at most 50% of the GPU's memory
config = tf.ConfigProto()
config.gpu_options.per_process_gpu_memory_fraction = 0.5
session = tf.Session(config=config)

If the nodes don’t all have the same hardware, the fraction needs to be calculated at application start (if they do, predefined constants are simpler). There is one more problem, though.

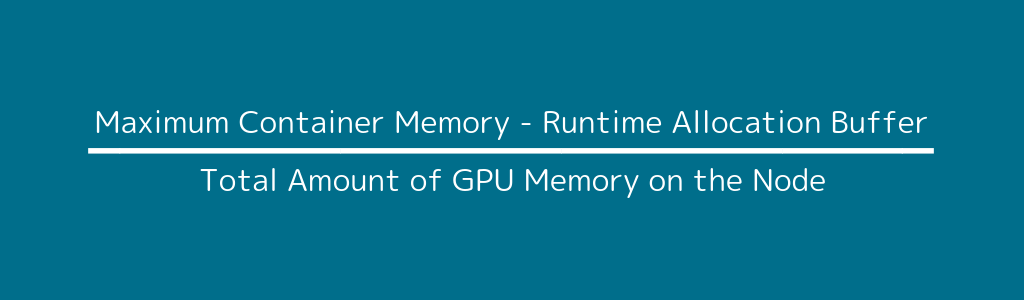

When I started experimenting with this approach, I thought it would be sufficient to call nvidia-smi on application startup to get the GPU’s total memory, and to pass the service/container the maximum memory (in megabytes) it may use. That would give us everything needed to calculate the correct fraction, which sets the maximum memory Tensorflow will use.
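That first, naive attempt can be sketched like this. nvidia-smi’s --query-gpu=memory.total with --format=csv,noheader,nounits prints one number (MiB) per GPU; the helper names are hypothetical, and a caller would typically take the first entry when the container sees a single GPU.

```python
import subprocess
from typing import List


def parse_total_memory(csv_output: str) -> List[int]:
    """Parse nvidia-smi memory.total output (one MiB value per line, one line per GPU)."""
    return [int(line.strip()) for line in csv_output.splitlines() if line.strip()]


def query_total_memory() -> List[int]:
    """Ask nvidia-smi for the total memory of each visible GPU."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_total_memory(out)


def naive_fraction(max_container_memory_mb: int, total_memory_mb: int) -> float:
    """Budget divided by card size; ignores the CUDA runtime's own allocation."""
    return max_container_memory_mb / total_memory_mb
```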

But the CUDA runtime also allocates memory itself. Depending on the application, this can take roughly 200 to 500MB of VRAM on top of the calculated fraction. The right approach is to run the code on a local or test machine without any orchestration in mind, and to set the GPU options to allocate only the memory needed at runtime. In Tensorflow, this can be done by:

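import tensorflow as tf  # Tensorflow 1.x API; in 2.x these live under tf.compat.v1

# Allocate GPU memory on demand instead of reserving it all up front
config = tf.ConfigProto()
config.gpu_options.allow_growth = True
session = tf.Session(config=config)

Using this setting together with the NVIDIA utilities (such as nvidia-smi), the peak memory usage of each application can be measured. The fraction should be calculated by: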

Where the first two can be injected by the job runner into the service/container via environment variables. To keep the application’s memory usage in sync with the Docker generic resource, I suggest using the same MAX_CONTAINER_MEMORY value for both settings.
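One hypothetical way to put this together, derived from the CUDA overhead described above: subtract the measured overhead from the container’s budget and divide by the card’s total memory, i.e. fraction = (MAX_CONTAINER_MEMORY - CUDA_OVERHEAD_MB) / total VRAM. CUDA_OVERHEAD_MB and the helper names are assumptions; MAX_CONTAINER_MEMORY is reused for both --generic-resource and the environment variable, as suggested above, and the total comes from nvidia-smi at startup.

```python
import os
from typing import List


def service_create_cmd(name: str, image: str, max_mb: int, overhead_mb: int) -> List[str]:
    """Job-runner side: reserve max_mb of VRAM and pass the same value into the container."""
    return [
        "docker", "service", "create",
        "--name", name,
        "--generic-resource", f"VRAM={max_mb}",
        "--env", f"MAX_CONTAINER_MEMORY={max_mb}",
        "--env", f"CUDA_OVERHEAD_MB={overhead_mb}",  # hypothetical variable name
        image,
    ]


def memory_fraction(max_mb: int, overhead_mb: int, total_mb: int) -> float:
    """Container side: share of the card Tensorflow may use, leaving room for CUDA itself."""
    usable = max_mb - overhead_mb
    if usable <= 0 or usable > total_mb:
        raise ValueError("budget must exceed the CUDA overhead and fit on the card")
    return usable / total_mb


def fraction_from_env(total_mb: int) -> float:
    """Read the two injected values from the environment."""
    return memory_fraction(
        int(os.environ["MAX_CONTAINER_MEMORY"]),
        int(os.environ["CUDA_OVERHEAD_MB"]),
        total_mb,
    )
```

For example, a 4000MB budget with 400MB of measured overhead on a 16000MB card gives 3600 / 16000 = 0.225, which would then go into per_process_gpu_memory_fraction.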

Job runner notes

Now that the prerequisites are in place, the only thing left is to create jobs that utilize GPU resources.

Training a Tensorflow model or running a calculation/simulation typically means running an application once per job, with different parameters each time. We call these one-shot jobs.

To support this, Docker Swarm needs some external help, at the very least to clean up finished jobs. A service can be started using docker service create --restart-condition none ..., but it will leave the finished or failed job behind.

To remove these services, we need a clean-up mechanism that removes services only after they have finished, while ‘queued’ services stay pending until they land on a node with enough resources available.
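A minimal sketch of such a clean-up loop, driving the docker CLI. It assumes one-shot jobs were created with a hypothetical label (for example --label one-shot=true) so that long-running services are never touched, and it treats a service as finished only when every task is in a terminal state; pending (queued) tasks keep the service alive. The helper names are hypothetical.

```python
import subprocess
from typing import List

# Task states after which a --restart-condition none job will not run again.
TERMINAL = ("Complete", "Failed", "Rejected", "Shutdown")


def is_finished(task_states: List[str]) -> bool:
    """True if every task reached a terminal state.

    States look like "Complete 2 minutes ago", as printed by
    docker service ps --format '{{.CurrentState}}'.
    """
    return bool(task_states) and all(s.split(" ", 1)[0] in TERMINAL for s in task_states)


def docker(*args: str) -> List[str]:
    """Run a docker CLI command and return its non-empty output lines."""
    out = subprocess.run(["docker", *args], capture_output=True, text=True, check=True).stdout
    return [line for line in out.splitlines() if line.strip()]


def cleanup(label: str = "one-shot") -> None:
    """Remove every labelled service whose tasks have all finished."""
    for name in docker("service", "ls", "--filter", f"label={label}", "--format", "{{.Name}}"):
        states = docker("service", "ps", name, "--format", "{{.CurrentState}}")
        if is_finished(states):
            docker("service", "rm", name)
```

Running cleanup() periodically (from cron or the job runner’s own loop) would remove finished jobs while leaving queued ones in place.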

Conclusion

This solution is not suitable for every use case, but I hope you can put my findings and workarounds to good use. While GPUs are fully utilized in most cases, with hardware getting stronger every year, I think this topic will become more and more relevant, and more sophisticated options are likely to appear. Until that day comes, these are the options for getting optimal GPU use out of Docker today.

Image by JacekAbramowicz on Pixabay